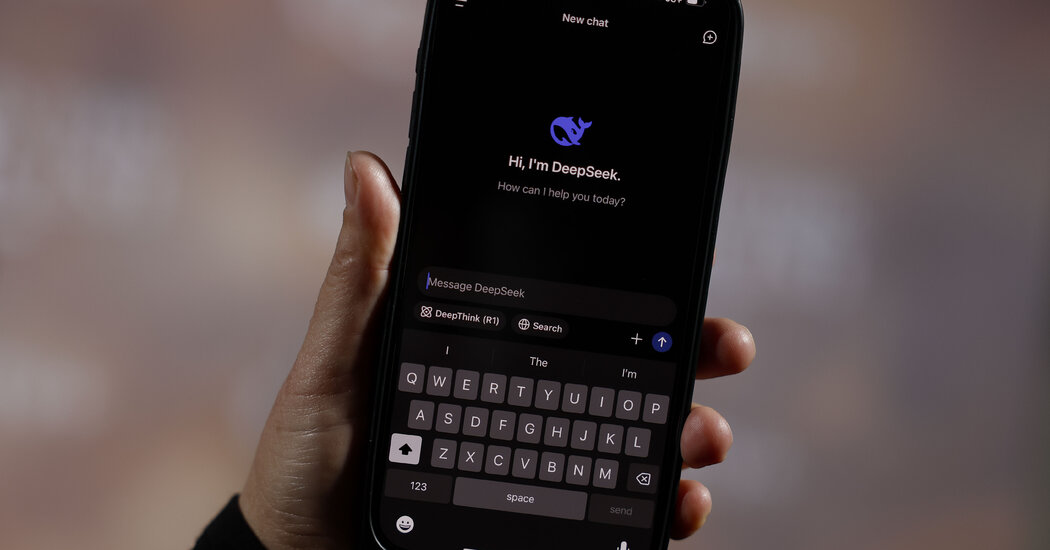

U.S. financial markets tumbled after a Chinese start-up called DeepSeek said it had built one of the world’s most powerful artificial intelligence systems using far fewer computer chips than many experts thought possible. A.I. companies typically train their chatbots using supercomputers packed with 16,000 specialized chips or more. But DeepSeek said it needed only about 2,000.

As DeepSeek engineers detailed in a research paper published just after Christmas, the start-up used several technological tricks to significantly reduce the cost of building its system. Its engineers needed only about $6 million in raw computing power, roughly one-tenth of what Meta spent in building its latest A.I. technology.

The leading A.I. technologies are based on what scientists call neural networks, mathematical systems that learn their skills by analyzing enormous amounts of data.

The most powerful systems spend months analyzing just about all the English text on the internet as well as many images, sounds and other multimedia. That requires enormous amounts of computing power.

About 15 years ago, A.I. researchers realized that specialized computer chips called graphics processing units, or GPUs, were an effective way of doing this kind of data analysis. Companies like the Silicon Valley chipmaker Nvidia originally designed these chips to render graphics for computer video games.

But GPUs also had a knack for running the math that powered neural networks.

As companies packed more GPUs into their computer data centers, their A.I. systems could analyze more data.

But the best GPUs cost around $40,000, and they need huge amounts of electricity. Sending the data between chips can use more electrical power than running the chips themselves.

To cut those costs, DeepSeek used a method called “mixture of experts.”

Companies usually created a single neural network that learned all the patterns in all the data on the internet. This was expensive, because it required enormous amounts of data to travel between GPU chips.

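The mixture of experts method instead splits the system into many smaller “expert” networks, each concentrating on its own subject. DeepSeek paired these smaller “expert” systems with a “generalist” system.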

The experts still needed to trade some information with one another, and the generalist – which had a decent but not detailed understanding of each subject – could help coordinate interactions between the experts.
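The arrangement of experts and generalist can be illustrated with a toy sketch. It is not DeepSeek's code, and the article does not say how inputs are assigned to experts. The sketch assumes a common design in which a small "router" scores every expert, only the top-scoring few run, and the generalist runs on every input. All names here (`moe_forward`, `router`, `top_k`) are hypothetical.

```python
import math
import random


def make_matrix(rng, rows, cols):
    """Random weights standing in for a trained network (illustrative only)."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def matvec(weights, x):
    """Multiply a weight matrix by an input vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def softmax(scores):
    """Turn raw router scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, router, generalist, top_k=2):
    """Run the generalist plus only the top_k highest-scoring experts."""
    probs = softmax(matvec(router, x))
    ranked = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(probs[i] for i in chosen)

    # Assumption: the generalist is modeled as an always-on shared expert.
    out = matvec(generalist, x)
    for i in chosen:
        gate = probs[i] / norm
        expert_out = matvec(experts[i], x)
        out = [o + gate * y for o, y in zip(out, expert_out)]
    return out, chosen


rng = random.Random(0)
dim, num_experts = 4, 8
experts = [make_matrix(rng, dim, dim) for _ in range(num_experts)]
generalist = make_matrix(rng, dim, dim)
router = make_matrix(rng, num_experts, dim)

x = [0.5, -1.0, 0.25, 2.0]
output, chosen = moe_forward(x, experts, router, generalist, top_k=2)
print("experts used:", chosen, "of", num_experts)
```

Only two of the eight experts do any multiplication for this input, which is where the savings come from: most of the system sits idle on any given piece of data.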

DeepSeek also mastered a simple trick involving decimals that anyone who remembers their elementary school math class can understand.

Remember your math teacher explaining the concept of pi. Pi, also denoted as π, is a number that never ends: 3.14159265358979… You can use π to do useful calculations, like determining the circumference of a circle.

The math that allows a neural network to identify patterns in text is really just multiplication: lots and lots and lots of multiplication. We’re talking months of multiplication across thousands of computer chips.

Typically, chips multiply numbers that fit into 16 bits of memory. But DeepSeek squeezed each number into only 8 bits of memory, half the space. In essence, it lopped several decimals from each number.
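The effect of storing a number in fewer bits can be sketched in a few lines. The article does not name the number formats involved, so this example assumes two widely used ones: IEEE half precision for 16 bits (10 fraction bits) and the "E4M3" layout for 8 bits (3 fraction bits). The helper `round_to_fraction_bits` is hypothetical and models only the loss of precision, not the limited range of each format.

```python
import math


def round_to_fraction_bits(x, bits):
    """Round x to the nearest value with `bits` stored fraction bits.

    Simplification: the exponent range is ignored, so overflow and
    underflow in real 16-bit and 8-bit formats are not modeled.
    """
    if x == 0.0:
        return 0.0
    mantissa, exponent = math.frexp(x)  # x = mantissa * 2**exponent, 0.5 <= |mantissa| < 1
    scale = 2 ** (bits + 1)             # +1 because the leading 1 bit is implicit
    return math.ldexp(round(mantissa * scale) / scale, exponent)


FP16_FRACTION_BITS = 10  # IEEE half precision
FP8_FRACTION_BITS = 3    # E4M3, assumed here for illustration

pi_16 = round_to_fraction_bits(math.pi, FP16_FRACTION_BITS)
pi_8 = round_to_fraction_bits(math.pi, FP8_FRACTION_BITS)
print(pi_16)  # 3.140625
print(pi_8)   # 3.25

# Circumference of a circle with diameter 10 at each precision.
print(10 * math.pi, 10 * pi_16, 10 * pi_8)

# Memory needed to hold one million numbers at each width.
count = 1_000_000
bytes_16 = count * 16 // 8
bytes_8 = count * 8 // 8
print(bytes_8 / bytes_16)  # 0.5
```

Each 8-bit value is a rougher approximation, but it takes half the memory and half the bandwidth to move between chips.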

Experimenting with tricks like these requires enormous amounts of risk. “You have to put a lot of money on the line to try new things – and often, they fail,” said Tim Dettmers, a researcher at the Allen Institute for Artificial Intelligence in Seattle who specializes in building efficient A.I. systems and previously worked as an A.I. researcher at Meta.

Many pundits pointed out that DeepSeek’s $6 million covered only what the start-up spent when training the final version of the system. In their paper, the DeepSeek engineers said they had spent additional funds on research and experimentation before the final training run. But the same is true of any cutting-edge A.I. project.

DeepSeek experimented, and it paid off. Now, because the Chinese start-up has shared its methods with other A.I. researchers, its technological tricks are poised to significantly reduce the cost of building A.I.
